LightPFP

To simulate more complex phenomena with greater fidelity, Matlantis introduces a powerful new option in 2025: LightPFP. In this article, we present a comprehensive look at its capabilities and impact.

What is LightPFP?

LightPFP is a feature that enables the construction of lightweight machine learning potentials tailored to specific research targets, using prediction results from PFP, the general-purpose MLIP of Matlantis, as training data.

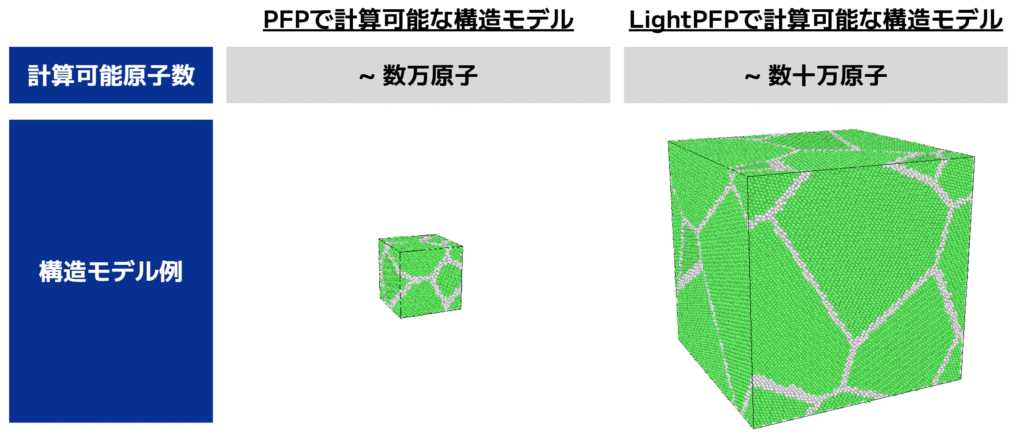

Because it is significantly lighter, LightPFP allows for faster inference than PFP and increases the maximum simulation scale from tens of thousands of atoms to hundreds of thousands of atoms.

This expansion makes it possible to simulate more complex phenomena and larger-scale systems that even PFP alone could not handle.

In general, constructing a machine learning potential requires generating large amounts of training data through quantum chemical calculations, a process that often takes several months or more. However, by leveraging PFP, which combines speed, accuracy, and versatility, it is possible to build high-performance potentials in just a few days.

| | Traditional Method | With LightPFP |
| --- | --- | --- |
| Data Construction | Preparing DFT calculation results (requires ~500 GPU-days) | Preparing PFP calculation results in Matlantis (requires <1 GPU-day) |
| Training | Training the model (depends on computing resources, ~3 GPU-days) | Leveraging the Matlantis environment and pre-trained models (requires <1 GPU-day) |
| Evaluation | Comparing with DFT calculation results and experimental data | Comparing with PFP calculation results and experimental data |
| Time Required to Complete Construction | A few months | A few days |

Features That Support LightPFP

In LightPFP, we offer the Moment Tensor Potential (MTP) architecture for building AI models trained on data generated by PFP. MTP achieves an excellent balance between computational efficiency and accuracy, and requires only a small number of parameters—typically between 100 and 1,000—enabling both lightweight models and high-speed inference. That said, like any machine learning potential, constructing an MTP model still involves time-consuming steps such as data generation and model training. To address this, LightPFP includes a number of features designed to reduce the burden of the development process. In the following section, we introduce some of these key features.

Automated Training Data Generation (dataset-generation Tool)

LightPFP includes a dataset-generation tool that automates the creation of training data required for building LightPFP models. It supports a variety of sampling methods—including MD simulations, strain application, and atomic substitution—allowing users to efficiently generate comprehensive training datasets with minimal manual effort.
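To make two of the sampling methods named above more concrete, the following is a minimal sketch of strain application and atomic substitution written with NumPy only. It is not the dataset-generation tool or its API: the function names, the 3% strain range, the Pt starting cell, and the Pd/Ni substitution candidates are all hypothetical choices made for illustration.

```python
import numpy as np

def apply_random_strain(cell, positions, max_strain, rng):
    """Deform a periodic cell with a small random symmetric strain (illustrative helper)."""
    eps = rng.uniform(-max_strain, max_strain, size=(3, 3))
    eps = 0.5 * (eps + eps.T)            # symmetrize: pure strain, no rotation
    deform = np.eye(3) + eps
    # Row-vector convention: each row of `cell` is a lattice vector, so
    # multiplying positions by the same matrix keeps fractional coordinates fixed.
    return cell @ deform, positions @ deform

def substitute_atoms(symbols, candidates, fraction, rng):
    """Replace a random fraction of atoms with species drawn from `candidates`."""
    symbols = list(symbols)
    n_swap = int(round(fraction * len(symbols)))
    for i in rng.choice(len(symbols), size=n_swap, replace=False):
        symbols[i] = str(rng.choice(candidates))
    return symbols

rng = np.random.default_rng(0)
a = 3.92                                  # illustrative fcc lattice constant in angstrom
cell = a * np.eye(3)
frac = np.array([[0.0, 0.0, 0.0], [0.5, 0.5, 0.0],
                 [0.5, 0.0, 0.5], [0.0, 0.5, 0.5]])
positions = frac @ cell
symbols = ["Pt"] * 4

# Build a small set of perturbed structures; labels are intentionally absent.
dataset = []
for _ in range(10):
    new_cell, new_pos = apply_random_strain(cell, positions, 0.03, rng)
    new_sym = substitute_atoms(symbols, ["Pd", "Ni"], 0.25, rng)
    dataset.append({"cell": new_cell, "positions": new_pos, "symbols": new_sym})
```

In a real workflow each generated structure would then be labeled with energies and forces from PFP; that step is left out here because it depends on the Matlantis environment.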

Pre-trained models

Although MTP is known for its relatively small number of parameters, solving the optimization problem during training is not always straightforward. To support parameter optimization, pre-trained models are available—trained on large and diverse datasets covering a wide range of structures. By selecting a pre-trained model suited to the target system and fine-tuning it, users can significantly reduce training time.

Active Learning

LightPFP’s active learning feature enables efficient training data generation by starting from just a small initial dataset. It automatically performs quality checks by comparing results against PFP and identifies gaps in the training data, allowing missing configurations to be sampled effectively. This capability frees users from having to determine the required amount and variety of training data to achieve a target model accuracy.
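The loop described above can be illustrated with a toy one-dimensional sketch. Everything here is a stand-in chosen for illustration: a Morse-like curve plays the role of the reference model (PFP in the article), a least-squares polynomial plays the role of the lightweight model, and the tolerance, pool, and function names are hypothetical. It does not reflect how LightPFP implements active learning internally.

```python
import numpy as np
from numpy.polynomial import Polynomial

def reference_energy(r):
    """Stand-in for the reference model: a Morse-like energy curve."""
    return (1.0 - np.exp(-1.5 * (r - 1.0))) ** 2

def fit_surrogate(r_train, e_train, max_degree=8):
    """Stand-in for the lightweight model: a least-squares polynomial fit."""
    degree = min(max_degree, len(r_train) - 1)
    return Polynomial.fit(r_train, e_train, degree)

def active_learning(pool, n_init=4, tol=1e-3, max_rounds=30):
    # Start from a small, evenly spaced initial dataset.
    train_idx = [int(i) for i in np.linspace(0, len(pool) - 1, n_init)]
    history = []
    for _ in range(max_rounds):
        r_train = pool[train_idx]
        model = fit_surrogate(r_train, reference_energy(r_train))
        # Quality check: compare the surrogate against the reference on the pool.
        errors = np.abs(model(pool) - reference_energy(pool))
        history.append(float(errors.max()))
        if errors.max() < tol:
            break
        # Fill the gap: add the worst-predicted point not yet in the training set.
        errors[train_idx] = -np.inf
        train_idx.append(int(np.argmax(errors)))
    return model, train_idx, history

pool = np.linspace(0.8, 2.5, 200)         # candidate configurations (here: distances)
model, train_idx, history = active_learning(pool)
print(f"{len(train_idx)} training points, max error {history[-1]:.2e}")
```

The point of the sketch is the control flow: the user supplies only a small starting set and a target accuracy, and the loop decides which additional configurations to label.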

Calculation Cases

As examples of calculations using LightPFP, we present two cases: a solid-liquid interface and a complex alloy.

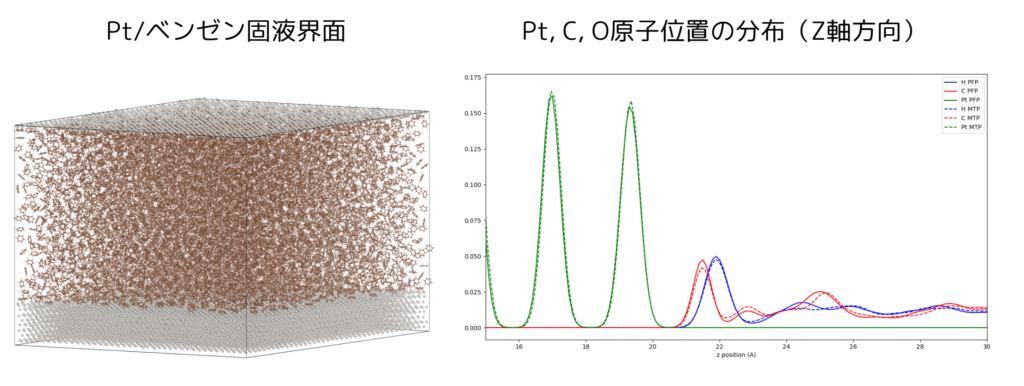

Pt(111) / benzene solid-liquid interface (84,600 atoms)

In simulations of catalytic reactions, solid–liquid interfaces often pose challenges due to system size limitations. Here, we reproduced the dynamics at a Pt/benzene interface using a large-scale model. The results confirmed that LightPFP significantly improves computational efficiency in long-timescale simulations, while maintaining the accuracy of PFP.

| MD steps | PFP [hour] | LightPFP [hour] |
| --- | --- | --- |
| 1,000 | 0.41 | 7.01 |
| 10,000 | 4.08 | 7.09 |
| 100,000 | 40.83 | 7.89 |
| 1,000,000 | 408.33 | 15.86 |
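The timings in the table can be read as a per-step cost plus a fixed offset. The short sketch below fits a straight line to each column and estimates where the two lines cross. Treating the roughly 7-hour LightPFP offset as a one-time cost (for example, model construction) is our assumption; the table itself does not say what the offset consists of.

```python
import numpy as np

# Values copied from the table above.
steps = np.array([1_000, 10_000, 100_000, 1_000_000], dtype=float)
pfp_hours = np.array([0.41, 4.08, 40.83, 408.33])
light_hours = np.array([7.01, 7.09, 7.89, 15.86])

# Least-squares line for each column: hours = slope * steps + intercept.
pfp_slope, pfp_intercept = np.polyfit(steps, pfp_hours, 1)
light_slope, light_intercept = np.polyfit(steps, light_hours, 1)

# Number of MD steps at which the two fitted lines intersect.
break_even_steps = (light_intercept - pfp_intercept) / (pfp_slope - light_slope)

# Ratio of per-step costs, ignoring the fixed offset.
per_step_speedup = pfp_slope / light_slope

# Ratio of total wall time at one million steps, offset included.
total_speedup_1m = pfp_hours[-1] / light_hours[-1]

print(f"fixed LightPFP offset: {light_intercept:.2f} h")
print(f"break-even: {break_even_steps:,.0f} steps")
print(f"per-step speedup: {per_step_speedup:.1f}x")
print(f"total speedup at 1M steps: {total_speedup_1m:.1f}x")
```

Under this linear reading, LightPFP overtakes PFP at roughly 17,500 steps, which is consistent with the table: PFP is still faster at 10,000 steps, and LightPFP is faster from 100,000 steps onward.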

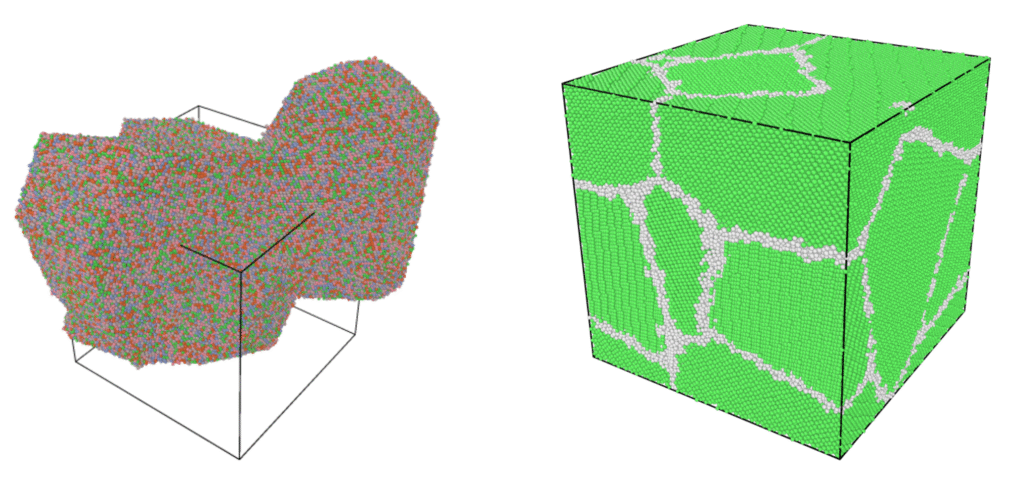

High Entropy Cantor Alloy (503,712 atoms)

In general, as the number of constituent elements increases, the potential energy surface becomes more complex, making it increasingly difficult to construct reliable interatomic potentials. In this study, we applied LightPFP to AlCoCrFeNi, a high-entropy Cantor alloy containing five elements. We successfully built a model that maintains PFP-level accuracy for key properties such as lattice constants and the equation of state, and conducted a large-scale simulation of a polycrystalline high-entropy alloy containing over 500,000 atoms.

Please contact us for other examples and specific construction methods.